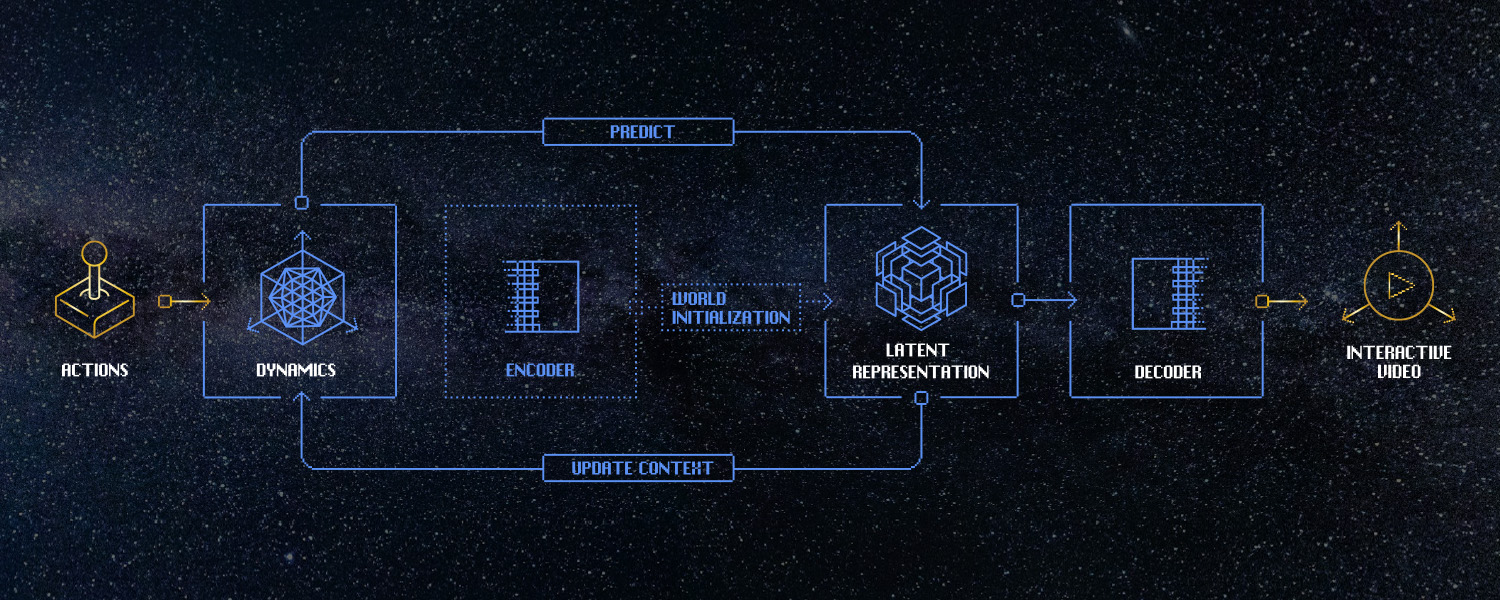

A world model, not a video model

Traditional bidirectional video models today take 1-2 minutes to generate only 5 seconds of footage. Odyssey-2, on the other hand, begins generating and streaming video instantly, producing a new frame of video every 50 milliseconds. As Odyssey-2’s video streams, you can shape it in real time with additional inputs. The result is a continuous stream of video that listens, adapts, and reacts.

| World Models | Video Models |
|---|---|
| Predicts one frame at a time, reacting to what happens. | Generates a full video in one go. |
| Every future is possible. | The model knows the end from the start. |
| Fully interactive: responds instantly to user input at any time. | No interactivity: the clip plays out the same every time. |
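The contrast in the table can be sketched in a few lines of Python. This is a toy illustration only, not Odyssey-2's API or implementation: `ToyWorldModel`, `stream`, and `generate_clip` are hypothetical names, and frames are plain strings rather than images. The only figure taken from the text is the 50-millisecond frame interval, which works out to 20 frames per second.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterator, List, Optional

FRAME_INTERVAL_MS = 50  # from the text: one new frame every 50 milliseconds
FRAMES_PER_SECOND = 1000 // FRAME_INTERVAL_MS  # 1000 / 50 = 20


@dataclass
class ToyWorldModel:
    """Hypothetical stand-in for a causal, frame-by-frame generator."""
    prompt: str
    history: List[str] = field(default_factory=list)

    def step(self, user_input: Optional[str] = None) -> str:
        # A midstream input changes the conditioning for every later frame.
        if user_input is not None:
            self.prompt = user_input
        # Each frame depends only on past frames and the current prompt.
        frame = f"frame {len(self.history)} | {self.prompt}"
        self.history.append(frame)
        return frame


def stream(model: ToyWorldModel, inputs: Dict[int, str], n_frames: int) -> Iterator[str]:
    """Yield frames one at a time; `inputs` maps frame index to midstream text."""
    for i in range(n_frames):
        yield model.step(inputs.get(i))


def generate_clip(prompt: str, n_frames: int) -> List[str]:
    """Toy 'video model': the whole clip is fixed before any frame is shown."""
    return [f"frame {i} | {prompt}" for i in range(n_frames)]


# Usage: the prompt changes at frame 2, and only later frames reflect it.
model = ToyWorldModel("A close-up portrait of a woman")
frames = list(stream(model, {2: "The woman paints her face with green paint"}, 4))
```

In the streaming version the caller can inject input between any two frames, so the output is not determined in advance. In the clip version there is no point at which new input could enter, so the same prompt always yields the same clip.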

A face painted

1. Prompt: A close-up portrait of a woman, illuminated by soft, directional lighting. The background is softly blurred. The camera remains steady.
2. Midstream: The woman paints her face with green paint.

A painting come to life

1. Prompt: A close shot of a painter using oil paint to paint a fireplace hearth on canvas.
2. Midstream: The flames painted on the canvas come to life. The canvas is on fire.